Academic scholarship faces many challenges. They range from problems with publishing and the related disruption brought by open access resources to difficulties with peer review and the use of impact factors to evaluate research.

News & Sources

Recent News & Developments

- SUNY Cancels Big Deal with Elsevier – the State University of New York Libraries Consortium announced on April 7 that it will not renew its bundled journal subscription deal with publisher Elsevier.

- Norwegian Universities Ditch Elsevier – Norway is the latest European country to cancel its subscription deal with Elsevier, following Germany and Sweden…..

- Plan S: Making full and immediate Open Access a reality – Plan S is an initiative for Open Access publishing that was launched in September 2018. The plan is supported by cOAlition S, an international consortium of research funders. Plan S requires that, from 2020, scientific publications that result from research funded by public grants must be published in compliant Open Access journals or platforms….

- ResearchGate and Springer Nature embark on pilot to deliver seamless discovery and an enhanced reading experience – ResearchGate and Springer Nature are partnering to pioneer innovative access models for scientific content in the rapidly evolving research ecosystem. Full-text articles published in select Nature journals since November 2017 will be rolled out to researchers’ ResearchGate profiles starting now and completed by March 7, making it easier to read or download research on or off campus from that moment on….

- UC Drops Elsevier – after months of negotiating over open-access fees and paywalls, the University of California System follows through on threat to cancel its journal subscription deal with Elsevier…

- UC terminates subscriptions with Elsevier in push for open access to publicly funded research – from the UC Office of Scholarly Communication

Sources for News and Commentary

- ACRLog – blogging by and for academic and research librarians

- ARL Policy Notes – a blog of the Association of Research Libraries' Influencing Public Policies strategic direction.

- Force11: The Future of Research Communications & e-Scholarship – a community driven to facilitate open knowledge sharing

- Studyfy – libraries, scholarship and publishing

- Latest News from SPARC – the Scholarly Publishing and Academic Resources Coalition

- Library of Congress Blog

- Scholarly Communications @ Duke

- The Scholarly Kitchen – What’s Hot and Cooking In Scholarly Publishing

- The Taper: Copyright and Information Policy at the UVA Library

Publishing

Borrowed from The Association of College & Research Libraries Scholarly Communications Toolkit.

Economics of Publishing

Paywall: The Business of Scholarship

Paywall: The Business of Scholarship is a documentary that focuses on the need for open access to research and science. It questions the rationale behind the $25.2 billion a year that flows into for-profit academic publishers and examines the 35-40% profit margin of Elsevier, the top academic publisher. It also shows how that margin often exceeds those of some of the most profitable tech companies, such as Apple, Facebook and Google.

Directed by Clarkson’s own Jason Schmitt.

The Serials Crisis

The Economics of Scholarly Publishing: The Serials Crisis

Libraries, and the faculty and institutions they serve, participate in the unusual business model that funds traditional scholarly publishing. Faculty produce and edit, typically without any direct financial compensation, the content that publishers then evaluate, assemble, publish and distribute. The colleges and universities that employ these faculty authors and editors then buy that packaged content back through their libraries, at exorbitant prices, for use by those same faculty and their students. In this model, publishers receive the “necessary inputs” free of charge and sell them back to the institutions that pay the salaries of the people who produced them. The result is an unsustainable system in need of transformation.

The subscription prices charged to institutions have far outpaced the budgets of the libraries responsible for paying those bills. Years of stagnant university funding and the economic downturn left many library budgets flat, while journal prices continued to rise. This problem became known as the “serials crisis.” Another element of the serials crisis that has been subject to discussion and debate is the “big deal,” in which a large commercial publisher sells a library its complete list of titles for less than those titles would cost if purchased individually (à la carte). Some argue that the big deal has helped mitigate the effects of the serials crisis, while others argue that it hurts more than it helps.

Over the years, librarians, publishers, scholars, and economists have debated the causes and current state of the serials crisis and of the unusual economic system that funds journal publishing, as well as possible solutions. Some of this debate, along with the data stakeholders have presented to support their positions, is presented below. You can also find further reading on the serials crisis on DigitalKoans.

A primary response to the serials crisis has been the development and growth of open access publishing. Several publishers and groups have experimented with alternative journal publishing models. Open access publishing in general, and its economics in particular, are covered in the Open Access section of the toolkit.

Viewpoints on the Serials Crisis

Economic Analysis of Scientific Research Publishing – 2003 report by the Wellcome Trust that concludes that the publishing of scientific research does not operate in the interests of scientists or the public good, but rather is dominated by a commercial market intent on improving its market position.

The Business of Academic Publishing: A Strategic Analysis of the Academic Journal Publishing Industry and its Impact on the Future of Scholarly Publishing (Electronic Journal of Academic and Special Librarianship, Winter 2008)

The Big Deal: Price Not Cost (InfoToday, 2011)

The Serials Crisis is Over (2013) – blog post by librarian Jeffrey Beall contending that the serials crisis is over. A response (“Of course the serials crisis is not over!”) to this blog post was written by Mike Taylor.

Data on Serials Pricing and the Serials Crisis

Evaluating Big Deal Journal Bundles (PNAS, 2014) – data the authors obtained through FOIA requests show that for-profit publishers frequently engage in price discrimination.

Deal or No Deal? Evaluating Big Deals and Their Journals (College & Research Libraries, 2013)

ARL Expenditure Trends, 1998 – 2018 – demonstrates how the cost of materials, including serials and monographs, has far outpaced library budgets.

Ted Bergstrom’s Research on Journal Pricing – economist Ted Bergstrom has published numerous papers and has created databases analyzing journal cost effectiveness (comparing citation rates to journal prices) and big deal contracts. His research and resources are linked from his website.
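Cost-effectiveness comparisons of the kind described above divide what a library pays for a journal by how heavily that journal is cited. The sketch below ranks journals by price per citation. The class, function names, journal titles, prices and citation counts are all hypothetical, chosen only for illustration. Bergstrom's own databases rely on their own data sources and methods, which this does not reproduce.

```python
from dataclasses import dataclass


@dataclass
class Journal:
    title: str
    annual_price: float  # hypothetical institutional subscription price
    citations: int       # hypothetical citation count over a comparable window

    @property
    def price_per_citation(self) -> float:
        # Treat an uncited title as infinitely expensive per citation.
        if self.citations == 0:
            return float("inf")
        return self.annual_price / self.citations


def rank_by_cost_effectiveness(journals: list[Journal]) -> list[Journal]:
    """Sort journals from lowest to highest price per citation."""
    return sorted(journals, key=lambda j: j.price_per_citation)


journals = [
    Journal("Journal A", annual_price=4200.0, citations=1400),
    Journal("Journal B", annual_price=900.0, citations=1800),
    Journal("Journal C", annual_price=6000.0, citations=500),
]
for j in rank_by_cost_effectiveness(journals):
    print(f"{j.title}: ${j.price_per_citation:.2f} per citation")
```

In this invented example, the cheapest title per citation (Journal B, at $0.50) ranks first, and the most expensive title overall also ranks last.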

Access to Research

The launch of the internet nearly 30 years ago allowed the concept of open access to develop. Early open access initiatives included the launch of the online subject repository arXiv in 1991, the publication of several free, peer-reviewed online journals in the early 1990s, and the development of the National Institutes of Health's repository PubMed Central in 2000. Then, in 2002 and 2003, three separate meetings in Budapest, Berlin and Bethesda produced a formal definition of “open access” that is still accepted globally. That widely accepted definition is as follows: Open access literature is digital, online, free of charge, and free of most copyright and licensing restrictions. (1)

Although digital publishing can cost less than print, open access publishing is not free. Instead of charging readers for access through purchase or subscription, publishers have adopted alternative business models that provide the financial means to make scholarship available. One cost recovery model is the article processing charge (APC), which the author pays before publication, sometimes with help from research grants, their university, or their library. Production costs can also be offset by the sale of memberships, add-ons and enhanced services. In some cases, journals are fully subsidized by a sponsoring institution, funder or other organization and charge neither authors nor readers. However, while open access publishing has the potential to reduce costs, cost is not the only driving force behind open access advocacy. The benefits to individual scholars, their institutions, scholarly communication, and the researching public are also primary motivating factors.

There are two primary routes by which open access literature can be published or otherwise made available. These two routes are frequently described as “gold open access” and “green open access.”

- Gold Open Access is when an author publishes in an open access journal, which provides immediate open access to all of its articles on the publisher's website. The term “Hybrid Open Access” is also sometimes used to describe a model in which a subscription journal provides gold open access only for those individual articles whose open access publishing fee has been paid. A common misconception about open access publishing is that it is not peer reviewed. However, many open access journals adhere to the same rigorous review process as traditional journals. Peer review is medium-independent, as relevant to online journals as to print journals, and it can be carried out cost-effectively with new supporting software and technologies.

- Green Open Access is when an author archives a version of their work in an open access repository, regardless of where it is ultimately published. After publication, the author self-archives a version of the article (the peer-reviewed postprint) for free public use in their institutional repository (IR), in a central repository (e.g., PubMed Central), or on some other open access website.

1 This definition comes from Peter Suber, who is considered the foremost leader and expert on the topic. His Overview of Open Access is an excellent brief explanation of what open access publishing is and why it is an important initiative.

Institutional Support

There are many ways that libraries and other institutions can support open access, ranging from simply providing information to enacting open access policies and establishing institutional repositories:

- Preparing guides to open access

- Encouraging dialog about open access, e.g., through blogs/newsletters

- Holding Open Access Week events to promote awareness

- Endorsing statements on open access such as the Berlin Declaration

- Establishing open access publication funds

- Establishing institutional repositories

- Establishing open access databases

- Issuing open access resolutions and enacting open access policies

- Purchasing institutional subscriptions that provide discounts on open-access publication fees

- Negotiating open access agreements with publishers

- Providing copyright advisory services to researchers

- Providing assistance in the conversion of institution-based journals to open access journals

- Forming academic centers devoted to scholarly publishing

For more reading on how libraries and other institutions can make changes, see the following:

- Librarians and Academic Libraries’ Role in Promoting Open Access: What Needs to Change? – from College & Research Libraries, 2024

- How can librarians support open research? – from Taylor & Francis Librarian Resources

- Open Access Publishing Support – MIT Libraries guide

Further Resources

Borrowed from The Association of College & Research Libraries Scholarly Communications Toolkit

The History of the Open Access Movement:

- Open Access Directory Timeline – for more details on the history of the open access movement

- Evolution of Open Access: A History – from SciELO

- History of Open Access – presentation slides from Paul Royster (University of Nebraska) (2016)

- “Development of Open Access Journal Publishing from 1993-2009” – PLoS ONE article (2011)

- Three declarations on Open Access:

- Budapest Open Access Initiative (Feb. 2002)

- Bethesda Statement on Open Access Publishing (Apr. 2003)

- Berlin Declaration on Open Access (Oct. 2003)

- Access Alone Isn’t Enough: Revisiting Calls for Discovery, Infrastructure, Technology, and Training

- Ask the Community (and Chefs): How Can We Achieve Equitable Participation in Open Research?

- Ask the Community (and Chefs): How Can We Achieve Equitable Participation in Open Research? – Part 2

- The coming digital divide: What to do, and not do, about it

- The Evolving Landscape of Research Access and its Impact on the Global South

- INASP – Access to Research in the Global South

- Information Poverty – UNICEF

- Pew Research Center – Digital Divide

- Yes, We Were Warned About Ebola –

In 2008, the National Institutes of Health (NIH) adopted a policy requiring that articles resulting from NIH-funded research be deposited in the PubMed Central repository and made publicly available within 12 months of publication. In 2013, the White House Office of Science and Technology Policy (OSTP) issued a directive requiring U.S. government agencies with more than $100 million in annual extramural research and development expenditures to make the results of taxpayer-funded research, both articles and data, freely available to the general public, with the goal of accelerating scientific discovery and fueling innovation. Those agencies have begun adopting and issuing plans for depositing scholarly articles and data in openly accessible repositories. Similarly, a few states and several private funders have proposed and enacted legislation and policies mandating public access to funded research. Libraries that support faculty and researchers subject to these mandates can use them as an opportunity to discuss author rights and open access with authors, including the importance of retaining rights to one's own work and of ensuring the widest possible reach and impact for scholarship.

More Reading

- ARL Guide to Public Access Mandates – ARL web page that compiles links to the full text of agency plans accompanied by an ARL-authored summary of individual agency plans

- SPARC – Public Access Directive – up-to-date list of links to agency plans and further coverage on the Executive Directive and other public access initiatives

- OSTP Responses Spreadsheet – crowdsourced spreadsheet tracking agency plans and their requirements

- Data Sharing Requirements Database – joint project of SPARC and Johns Hopkins University, allows users to search database for data sharing requirements by agency

- Overview of OSTP Responses (FigShare) – chart providing a visual summary of requirements of individual agency public access policies

- Public Access Policies FAQ – crowdsourced FAQ about the requirements of public access mandates, open access, and similar issues. Developed by libraries for use by libraries.

- Expanding Public Access to Federally Funded Research: Implementing the OSTP Memo – YouTube panel presentation (2013) at Columbia University as part of Research without Borders programming that discusses the requirements of the public access directive and the publisher (CHORUS) and library (SHARE) responses to the directive.

- Support When it Counts: Library Roles in Public Access to Federally Funded Research – 2013 Charleston Conference presentation slides on how libraries can support public access. See also the accompanying paper at http://docs.lib.purdue.edu/charleston/2013/Communication/2/

- Public Access Policy Support Programs at Libraries: A Roadmap for Success – paper (2010) focused on library support of compliance with NIH mandate, but useful information for supporting other public access mandates

- Publication of Government-Funded Research, Open Access, and the Public Interest – article (2016) on public access mandates in the context of the larger open access initiative. Nice overview of the issues and current state of programs and initiatives to further the principles of open access.

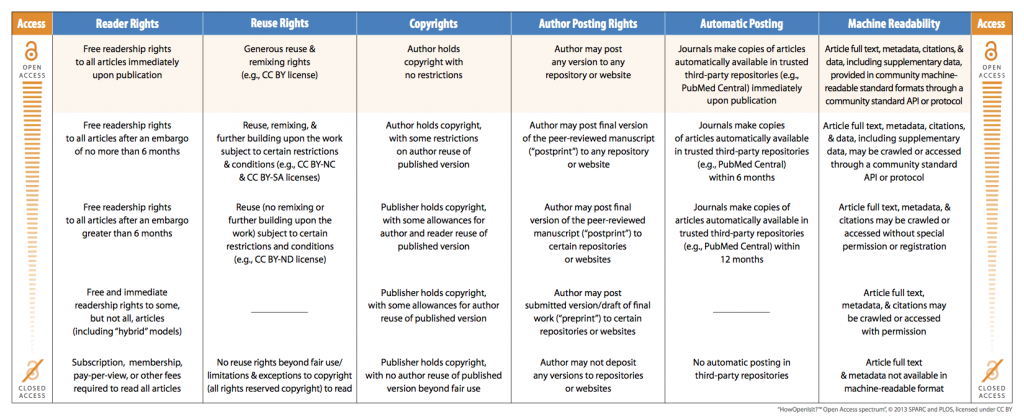

The Open Access Spectrum was developed by SPARC in collaboration with PLOS and OASPA. This tool displays the core components of open access across a spectrum indicating how closed or open a journal is based upon its policies on reader rights, reuse rights, copyrights, author posting rights, automatic posting, and machine readability. The How Open Is It? guide can be downloaded and shared with faculty and students who have questions about how to evaluate open access journals. The Open Access Spectrum Evaluation Tool can also be used to filter and locate journals based upon publisher policies on these key components.

Open Science & Open Data

The four fundamental goals of open science:

- Transparency in experimental methodology, observation, and collection of data.

- Public availability and reusability of scientific data.

- Public accessibility and transparency of scientific communication.

- Using web-based tools to facilitate scientific collaboration.

From: Dan Gezelter, Openscience.org

More

- Open Science & Open Data – Clarkson University Libraries subject guide

- Road to Open Science – Episode 1 – A Social Dilemma

- The OpenScience Project

- Estimating the Reproducibility of Psychological Science – reproducibility is a defining feature of science, but the extent to which it characterizes current research is unknown. We conducted replications of 100 experimental and correlational studies published in three psychology journals using high-powered designs and original materials when available. Replication effects were half the magnitude of….

- The Irreproducibility Crisis of Modern Science: Causes, Consequences, and the Road to Reform – a reproducibility crisis afflicts a wide range of scientific and social-scientific disciplines, from epidemiology to social psychology. Improper use of statistics, arbitrary research techniques, lack of accountability, political groupthink, and a scientific culture biased toward producing positive results together have produced a critical state of affairs. Many supposedly scientific results cannot be reproduced in subsequent investigations.

- Opinion: Is science really facing a reproducibility crisis, and do we need it to? – efforts to improve the reproducibility and integrity of science are typically justified by a narrative of crisis, according to which most published results are unreliable due to growing problems with research and publication practices. This article provides an overview of recent evidence suggesting that this narrative is mistaken, and argues that a narrative of epochal changes and empowerment of scientists would be more accurate, inspiring, and compelling.

- A reproducibility crisis? – the headlines were hard to miss: Psychology, they proclaimed, is in crisis.

- Science’s “Reproducibility Crisis” is being used as political ammunition – David Randall and Christopher Welser are unlikely authorities on the reproducibility crisis in science. Randall, a historian and librarian, is the director of research at the National Association of Scholars, a small higher education advocacy group. Welser teaches Latin at a Christian college in Minnesota. Neither has published anything on replication or reproducibility.

- Center for Open Science

- Open Science Framework

- The Road to Open Science Podcast – in the podcast series The Road to Open Science, hosts Sanli Faez and Lieven Heeremans follow the path to adapting open science practices through the perspective of researchers from different disciplines. In each episode they talk to people within the academic community about their research, initiatives, or experiences in relation to open science.

Evaluating Research

Borrowed from The Association of College & Research Libraries Scholarly Communications Toolkit.

Traditionally, peer-reviewed journals have been the standard for measuring the quality and importance of scholarly literature. However, the growing number of journals and articles being published has placed significant strain on this process, in part because the rate of publication far outpaces the pool of available referees. Because referees receive little incentive in the form of credit in the tenure and promotion process, this shortage is expected to continue.

Despite its strong track record in filtering research, the peer review system has begun to show signs of weakness. The authors of the altmetrics manifesto point out that “peer review…is slow, encourages conventionality, and fails to hold reviewers accountable…[and] given that most papers are eventually published somewhere, peer-review fails to limit the volume of research.” Such a system is unsustainable over the long term. In response, alternative forms of peer review have begun to develop that take a more open and transparent approach than the traditional model:

Open Peer Review: Open peer review begins with an author or editor posting an unpublished manuscript online in a comment-enabled web environment, and then inviting peers to contribute comments and criticisms. The argument is that open peer review not only allows for transparency in the peer review process, but also enables a wider variety of input, including cross-discipline critique and more technical, “non-scholarly” input.

- Dr. Jon Tennant on an Introduction to Open Peer Review Process – The European Council of Doctoral Candidates and Junior Researchers (2018)

- For a wonderful bibliography on alternative forms of peer review, including open peer review, please see the “Transforming Peer Review” bibliography.

- Presentation Slides – Open Peer Review: Bold Steps Toward Change in Scholarly Communication (2012)

- ImpactStory blog post on open peer review – what it is and how to become an effective open peer reviewer

- Open Review: A study of contexts and practices – white paper by Kathleen Fitzpatrick and Avi Santo

Post-Publication Peer Review:

- Altmetrics are a form of post-publication peer review

- F1000 – identifies and evaluates the most important articles in biology and medical research publications

- PubPeer – tool for commenting on journal articles

- Retraction Watch – blog on retractions of scholarly articles, which often result from post-publication review

Measuring the Impact of Research

Borrowed from The Association of College & Research Libraries Scholarly Communications Toolkit.

Background

A Critical Review of a Traditional System

One of the many challenges faced by scholars is to identify the impact their scholarship is having in their respective disciplines. This need is frequently driven by promotion and tenure. Alternatively, they may be interested in locating the most important research being done in their field. What are the important ideas now shaping opinions, methods, and research? The impact of published scholarship is also important for institution-level evaluation conducted by university and college administrations and for collection building and maintenance projects conducted by the library.

Traditionally, journal impact factors have been used to gauge the impact of scholarship. The impact factor came into use during the 1970s through the work of Eugene Garfield. A journal's impact factor for a given year is calculated as the number of citations received that year by items the journal published in the previous two years, divided by the number of citable items it published in those two years. The impact factor therefore indicates which journals are most influential in a scholar's field. In other words, it measures how often the average recent article in a journal has been cited.
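The two-year impact factor for a year Y is conventionally computed as the citations received in Y by items a journal published in Y-1 and Y-2, divided by the number of citable items it published in Y-1 and Y-2. The short sketch below applies that arithmetic to made-up counts. The function name and all numbers are hypothetical. Official values depend on Clarivate's own rules for which items count as citable, and this sketch does not model those rules.

```python
def two_year_impact_factor(citations_to_prev_two_years: int,
                           citable_items_prev_two_years: int) -> float:
    """Citations received in year Y by items published in Y-1 and Y-2,
    divided by the number of citable items published in Y-1 and Y-2."""
    if citable_items_prev_two_years <= 0:
        raise ValueError("need at least one citable item")
    return citations_to_prev_two_years / citable_items_prev_two_years


# Hypothetical journal: 120 citable items in 2021 and 130 in 2022,
# which together received 600 citations during 2023.
jif_2023 = two_year_impact_factor(600, 120 + 130)
print(f"{jif_2023:.2f}")  # 2.40
```

Because the numerator counts every citation to those two years of output, a few very highly cited articles can raise the average for the whole journal. This is one reason the measure is criticized below.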

The journal impact factor is still used today as a measure of a journal's relative importance within its field. However, dissatisfaction with reliance on traditional journal impact factors has been growing, for three main reasons. First, they impose a time lag on assessment, since journals take time to accrue citations. Second, the system can be gamed. Third, they risk elevating the rank of an article that is cited as a cautionary or corrective tale to the scholarly community. As a result, different metrics have emerged, including author- and article-level metrics and alternative metrics, which rely on social media, reference management software and other non-traditional sources of citation. Tools are also available that let researchers create profiles that track their individual impact and correctly and consistently identify which research outputs are the product of their efforts.

This section of the Toolkit does not provide guides to finding and using the variety of traditional journal-, article- and author-level metrics. Instead, it provides librarians with resources for thinking critically about traditional metrics. These resources can help librarians facilitate conversations with the scholars they serve about the role of impact factors in evaluating scholarship, whether for tenure and promotion or for finding and evaluating research in one's field. This section also provides tools and resources on the growing field of altmetrics, as well as tips and resources for helping scholars maximize the identification and impact of their research.

The following resources offer a critical look at traditional measurements of impact:

- Eugene Garfield – The History and Meaning of the Journal Impact Factor. Journal of the American Medical Association. v. 295, no. 1 (2006). (For more on the work and scholarship of Eugene Garfield, see the Garfield Papers Collection at the University of Pennsylvania)

- Stephen Curry. Sick of Impact Factors. Occam’s Typewriter (Blog). 2012. See also the follow-up to the post here.

- Jason Priem and Bradley Hemminger. Scientometrics 2.0: Toward new metrics of scholarly impact on the social Web. First Monday. v. 15, no. 10 (2010).

- Björn Brembs et al. Deep Impact: Unintended consequences of journal rank. Frontiers in Human Neuroscience. (2013).

- San Francisco Declaration on Research Assessment (DORA) – statement crafted by a group of editors and publishers of scholarly journals on the need to improve the way research outputs are measured and evaluated. Site includes links to news stories covering the DORA initiative. See Kent Anderson's post in the Scholarly Kitchen blog for an opposing viewpoint on DORA.

- Adam Eyre-Walker and Nina Stoletzki. The Assessment of Science: The relative merits of post-publication review, the impact factor and the number of citations. PLoS Biology. (2013)

- ScienceLive: Should We Ditch the Impact Factor? – YouTube webinar (2013)

- Caspar Chorus and Ludo Waltman. A Large-Scale Analysis of Impact Factor Biased Journal Self-Citations. PLoS ONE. (2016).

- Vincent Larivière et al. A Simple Proposal for the Publication of Journal Citation Distributions. bioRxiv preprint (2016).

As more information is disseminated electronically, researchers increasingly interact with it on the open web in a variety of ways and through many different platforms and media types. Altmetrics measure how many times a journal article is downloaded, shared, commented on, and cited in social media outlets, and they can provide a meaningful indicator of an article's impact among different user populations. Jason Priem, one of the founders of altmetrics, and Bradley Hemminger have compiled a list of sources from which data can be collected and relayed back to scholars as meaningful impact data. These sources fall into seven categories:

- Social bookmarking sites (Delicious, CiteULike, Connotea)

- Reference managers (Mendeley, Zotero)

- Recommendation sites (Digg, Reddit, FriendFeed, Faculty of 1000)

- Publisher-hosted comment spaces (PLoS, British Medical Journal)

- Microblogging (Twitter)

- Blogs (WordPress, Blogger, Researchblogger)

- Social networks (Facebook, Nature Networks, Orkut)

Culling usage data from various social sites has several advantages. First, sites that offer open APIs (application programming interfaces) can be queried immediately for up-to-date usage statistics. This is an advantage over traditional citations, which take time to accrue. Second, a growing number of platforms, such as ImpactStory, Altmetric.com, and Plum Analytics, allow scholars to track usage of their works across research blogs, journals, and user populations. This provides granular data and a broader perspective on overall impact. It is important to note that altmetrics are not meant to replace traditional citation counts. They are best used together with citations to build an overall picture of scholarly impact.
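At its simplest, the aggregation these services perform can be sketched as grouping individual mentions of an article by source category and counting them. The sketch below assumes a hypothetical list of (source, event) records of the kind that might be gathered from various sites. It maps sources onto Priem and Hemminger's categories and does not call any real service or API. The source keys, the mapping, and the function name are illustrative assumptions.

```python
from collections import Counter

# Hypothetical mapping from source site to Priem and Hemminger's categories
# (covers only a subset of the sites listed above).
SOURCE_CATEGORY = {
    "citeulike": "Social bookmarking sites",
    "mendeley": "Reference managers",
    "zotero": "Reference managers",
    "reddit": "Recommendation sites",
    "plos_comments": "Publisher hosted comment spaces",
    "twitter": "Microblogging",
    "wordpress": "Blogs",
    "facebook": "Social networks",
}


def summarize_mentions(events: list[tuple[str, str]]) -> Counter:
    """Count events per category; unmapped sources are counted under 'Other'."""
    counts: Counter = Counter()
    for source, _event_type in events:
        counts[SOURCE_CATEGORY.get(source, "Other")] += 1
    return counts


# Hypothetical events recorded for a single article.
events = [
    ("twitter", "share"), ("twitter", "share"), ("mendeley", "save"),
    ("zotero", "save"), ("wordpress", "post"), ("news_site", "mention"),
]
print(summarize_mentions(events))
```

Keeping separate counts per category, rather than folding everything into a single score, preserves the granularity described above. It also makes it easy to report these counts alongside traditional citations rather than in place of them.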

Additional Readings, Resources and Presentations on Altmetrics:

- Altmetrics: a manifesto

- Evolution of Impact Indicators: From bibliometrics to altmetrics

- Overview of the Altmetrics Landscape

- Keeping Up With….Altmetrics

- Riding the Crest of the Altmetrics Wave: How Librarians Can Help Prepare Faculty for the Next Generation of Research Impact Metrics

- PLoS Collections: Altmetrics

- Altmetrics in the wild: Using social media to explore scholarly impact

- 56 Indicators of Impact

- SPARC – Article Level Metrics

- Practical Uses of Altmetrics – How Libraries are Using New Metrics – YouTube video

- NISO – Report on Outcome of Alternative Assessment Metrics Project

A growing number of tools are available to help scholars maximize their impact, whether by correctly identifying themselves as the creators of research products or through social-media-style profile pages highlighting their expertise and output. Libraries can help scholars maximize their impact by promoting author identification tools such as ORCID, sharing data on open access publishing and citation rates, and directing scholars to sites where they can build their own researcher profile pages.

Tips and Tools for Maximizing Impact

- ORCID – ORCID (Open Researcher and Contributor ID) aims to solve the name ambiguity problem in scholarly communication. It does so by creating a registry of persistent unique identifiers for individual researchers, along with an open and transparent linking mechanism between ORCID, other ID schemes, and research objects such as publications, grants, and patents. See also ORCID Author Identifiers: A Primer for Librarians. Medical Reference Services Quarterly (2016).

- The Becker Medical Library at Washington University in St. Louis has put together an extensive list of tips and tools to help scholars maximize their impact.

- Maximizing Impact of Research: a handbook for social scientists – London School of Economics

- FigShare – Figshare allows researchers to publish all of their research outputs in seconds in an easily citable, sharable and discoverable manner. All file formats can be published, including videos and datasets that are often demoted to the supplemental materials section in current publishing models. By opening up the peer review process, researchers can easily publish null results.

- Google Scholar Citations – researchers can create a public profile listing their articles and citation metrics. A public profile can appear in Google Scholar search results when someone searches for the researcher's name.

- Article covering the variety of researcher services that are available: Making Sense of Researcher Services. Journal of Library Administration (2016)
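ORCID iDs, mentioned above, are 16 characters long. The last character is a check character computed with the ISO/IEC 7064 MOD 11-2 algorithm, so a mistyped iD can often be caught before it is saved in a profile or repository record. The sketch below checks an iD's hyphenated format and its check character. The function and pattern names are illustrative. The example iD, 0000-0002-1825-0097, is the sample record ORCID uses in its own documentation. This sketch does not confirm that the iD is actually registered.

```python
import re

# Hyphenated form: four groups of four characters; the last may be 'X'.
ORCID_PATTERN = re.compile(r"^[0-9]{4}-[0-9]{4}-[0-9]{4}-[0-9]{3}[0-9X]$")


def orcid_check_char(base_digits: str) -> str:
    """ISO/IEC 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for digit in base_digits:
        total = (total + int(digit)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """True if the string is a well-formed ORCID iD with a correct check character."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_char(digits[:15]) == digits[15]


print(is_valid_orcid("0000-0002-1825-0097"))  # True
print(is_valid_orcid("0000-0002-1825-0098"))  # False (wrong check digit)
```

A check character like this catches most single-digit typos and transposed digits. A library could therefore run it when collecting author iDs for an institutional repository, before looking the iD up in the ORCID registry itself.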

Using Open Access to Increase Impact

- Open Access Citation Studies – although not updated since 2013, this resource offers excellent evidence of how open access increases a work's impact

- Article published in 2004 in College & Research Libraries on the citation impact of open access articles: Do Open Access Articles Have a Greater Research Impact?

- Open Access Increases Citation Rate – PLoS Biology article on the increased impact of open access publications

- The Open Access citation advantage: Studies and results to date – 2010 University of Southampton article

Related Subject Guides

Credit

Portions of this page were borrowed from The Association of College & Research Libraries Scholarly Communications Toolkit.

This guide was created under a Creative Commons Attribution-NonCommercial-ShareALike license.