Large Language Models (LLMs), a type of generative artificial intelligence, are everywhere. To use these technologies responsibly, it is important to develop your AI literacy skills. Per Educause’s AI Literacy in Teaching and Learning: A Durable Framework for Higher Education, AI literacy involves:

- Technical understanding of how AI works and basic terminology

- Considering the ethics of AI tools

- Evaluating tools and their output

- Using tools effectively and appropriately

Table of Contents:

- Pros and Cons

- Benefits of AI

- Concerns with AI

- Evaluating AI Models

- Prompt Engineering

- AI Tools for Research

- More Resources

Terminology

- Artificial intelligence (AI) – a branch of computer science dedicated to developing programs that can discover patterns, take actions, create results based on input, etc. (Midwestern State University, Moffett Library’s Glossary of Artificial Intelligence Terms)

- Neural Networks – “a mathematical system that actively learns skills by identifying and analyzing statistical patterns in data; inspired by the neurons in our brain” per MIT’s Glossary of Terms: Generative AI Basics

- Large Language Models – neural networks that work by forecasting word sequences (MIT’s Glossary of Terms: Generative AI Basics)

- Deep learning models – a type of neural network capable of recognizing more complex patterns (Midwestern State University, Moffett Library’s Glossary of Artificial Intelligence Terms)

- Transformer models – a type of language model and neural network, also classified as a deep learning model, that uses self-attention mechanisms to focus on the most important parts of the input and output (Midwestern State University, Moffett Library’s Glossary of Artificial Intelligence Terms)

- Natural Language Processing – a subfield of AI focused on getting machines to understand and generate ordinary human language rather than requiring specific terminology (MIT’s Glossary of Terms: Generative AI Basics)

- Generative AI – content-generating AI platforms that create text, video, images, or code by finding patterns in the large datasets used as their training data and using those patterns to produce novel responses that stay close to the source material (CNET – ChatGPT Glossary: 52 AI Terms Everyone Should Know)

- Hallucinations – incorrect or nonexistent information returned by a generative AI platform (MIT’s Glossary of Terms: Generative AI Basics)

AI Basics

- An artificial intelligence perspective: How knowledge and confidence shape risk and benefit perception – by Said et al., published in Computers in Human Behavior

- Artificial Intelligence for the Benefit of Everyone – by Hessami et al., published in IEEE

- Gender and content bias in Large Language Models: a case study on Google Gemini 2.0 Flash Experimental – by Balestri, published in Frontiers in Artificial Intelligence

- Artificial Intelligence: Generative AI’s Environmental and Human Effects – from the US Government Accountability Office

- Large language models, explained with a minimum of math and jargon – by Lee & Trott

Pros and Cons

AI is a major talking point, cited as a catalyst for both innovation and job loss. Understanding the pros and cons of this technology and using it mindfully are vital parts of AI literacy.

Benefits of AI

Customized Learning

Generative AI is being used in education to provide personalized learning content tailored to individual students (AI in School: Pros and Cons), allowing students and instructors to identify and target weaknesses. Students can adjust the pace of learning and receive feedback specific to their needs, which is much more challenging for teachers to provide in person (Washington State University’s Office of the Provost, Benefits of AI).

Customized learning can help students focus on the areas where they are struggling, especially since these tools are available 24/7. You can get feedback from an AI tool at any time, whereas identifying your individual needs through traditional channels may take far longer or may not be possible at all. This interaction may also be more engaging for students than traditional teaching methods (Benefits, Challenges, and Sample Use Cases of Artificial Intelligence in Higher Education from Hanover Research), helping them stay focused on learning.

Some tools can be used as a study partner to help students enhance their own learning. For more tips on using AI to study, check out the How to Study guide.

Multiple Perspectives & Critical Thinking

LLMs can be prompted to engage in role-playing, offering different perspectives for students to challenge their existing knowledge and beliefs (The impact of generative AI on higher education learning and teaching: A study of educators’ perspectives, published in Computers and Education: Artificial Intelligence).

There is evidence that students using LLMs can develop more effective arguments because these tools can provide targeted feedback and identify weaknesses (Investigating the use of ChatGPT as a tool for enhancing critical thinking and argumentation skills in international relations debates among undergraduate students, published in Smart Learning Environments). In turn, this can help students understand complex concepts more completely and strengthen their critical thinking skills.

By considering the ethics of LLMs and other forms of generative AI and exploring different aspects of this technology, students can improve their critical thinking skills (The Future Benefits of Artificial Intelligence for Students from URBE University). By being aware of the pros and cons of these tools, you can make an informed decision about when and how to use them.

Big Data Management & Visualization

Depending on the specific tool you are using, you may be able to analyze very large data sets that would take a long time to process manually, and to draw conclusions that are less obvious (Forbes’s Advantages of Artificial Intelligence (AI) in 2025). AI has strong pattern-recognition capabilities, so it can detect trends in data that human eyes might miss.

Some tools are also able to generate visuals of the data, making it easier to see these patterns and evaluate the data. Visualization makes information about large data sets more accessible, allowing you to demonstrate trends and outliers in your data (Data Visualization from the University of Texas Libraries).

Task Automation

One of the biggest advantages of AI is automating repetitive tasks, like data collection and entry, analysis, and synthesis (Forbes’s Advantages of Artificial Intelligence (AI) in 2025). Industries across all sectors, including cutting-edge research, are using AI systems to save time on tasks like analyzing documents (Thomson Reuters’s Benefit of AI). Researchers use various platforms to summarize articles, a task that would take much longer to complete by hand.

Keep in mind that you need to be proficient in any task you automate so you can recognize errors in the output. If you rely on generative AI to automate your tasks without sufficient oversight, you risk presenting false or incorrect information. In professional and academic settings, this can harm your career. It is important to understand how and when to use these tools, and when to rely on your own knowledge.

Concerns with AI

Incorrect Information

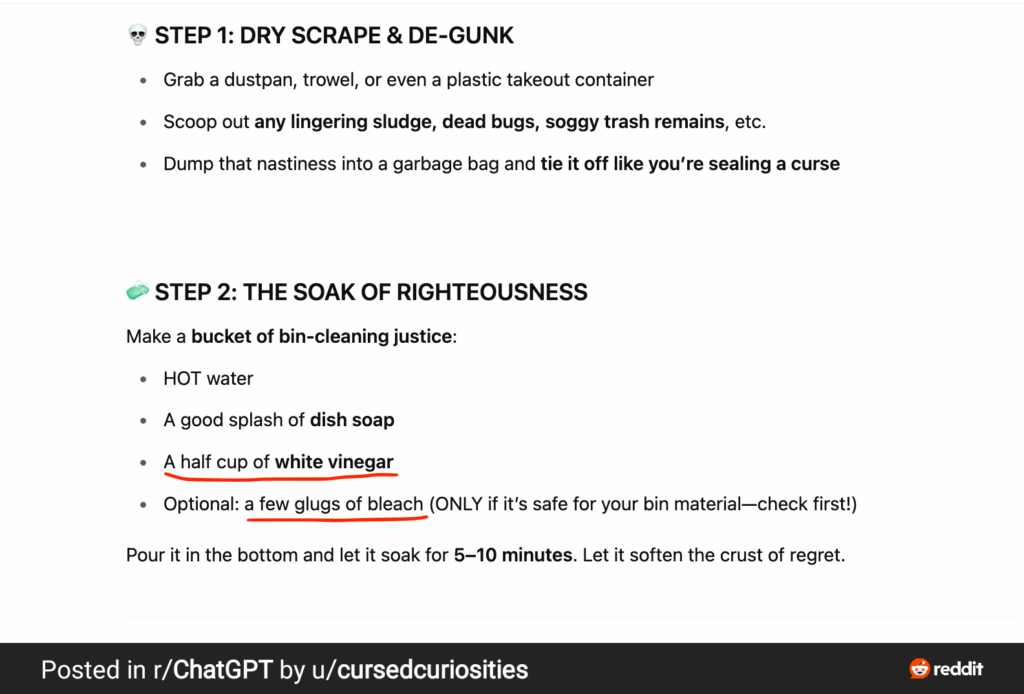

In case you’re not familiar with chemistry, the above picture shows something that is a very bad idea! Combining bleach with vinegar will create highly toxic chlorine gas.

But beyond demonstrating the importance of your high school chemistry course, this example shows how dangerous it is to blindly follow the advice from an LLM.

While many people consider LLMs to be sophisticated search engines, this is very far from the truth. Instead, consider that LLMs are more like “an extremely powerful form of autocomplete” (Foundations of Generative AI, The AI Pedagogy Project). OpenAI includes this disclaimer in their Privacy Policy: “Services like ChatGPT generate responses by reading a user’s request and, in response, predicting the words most likely to appear next. In some cases, the word most likely to appear next may not be the most factually accurate.”
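To make the “autocomplete” comparison concrete, here is a toy sketch in Python. It is not how ChatGPT or any production LLM works (those use large neural networks, not simple word counts); it only illustrates the idea in OpenAI’s disclaimer that choosing the word most likely to come next is not the same as choosing the accurate one. The training text and the function names `train_bigrams`, `predict_next`, and `complete` are hypothetical, made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(followers: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word that most often followed `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def complete(followers: dict[str, Counter], start: str, max_words: int) -> str:
    """Greedily extend `start` one most-likely word at a time, stopping at a period."""
    out = [start]
    for _ in range(max_words):
        nxt = predict_next(followers, out[-1])
        if nxt is None or nxt == ".":
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical training text: a popular misconception appears more often than the correction.
training_text = (
    "the great wall is visible from space . "
    "the great wall is visible from space . "
    "the great wall is not visible from space ."
)
model = train_bigrams(training_text)
print(predict_next(model, "is"))    # "visible" followed "is" twice, "not" only once
print(complete(model, "the", 10))   # the most likely sentence repeats the misconception
```

The toy model never checks facts; it simply reproduces whatever pattern was most frequent in its training text, which is why fluent output still needs to be verified.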

The models themselves are built on complex code and operate as a “black box,” so no one outside the companies developing them knows how they function. They are designed to engage users in a conversational tone, which makes them seem like a person. This can make the models seem like authorities on a subject, when in reality they combine the information they find without automatically checking it for accuracy or conflicting information (though you can prompt them to do so).

So, how do you know whether the information you receive from a chatbot or other LLM is incorrect? Always assume that the results you receive contain some incorrect information. Fact-check them against reliable sources, such as academic papers or technical reports. Finding the same information in multiple sources helps, as does grounding, which means combining your requests with web searches or scholarly source searches (University of Arizona Libraries – Student Guide to ChatGPT). Grounding is an option in many major models, including ChatGPT, Claude, and Perplexity AI, and may reduce the frequency of hallucinations or inaccuracies.

Eroding Critical Thinking

While AI can improve critical thinking through mindful, thoughtful use, critical thinking skills can also degrade when these tools are used mindlessly. In their recent article on the effect of ChatGPT on nursing education, Peltonen et al. advise that “overreliance on generative AI technologies can potentially decrease the effectiveness of student learning and critical thinking development, which may generate unintended consequences for professional practice” (The ChatGPT Effect: Nursing Education and Generative Artificial Intelligence, p. e41). Although this article focused on ChatGPT, other LLMs may have similar effects on critical thinking.

Other research echoes these concerns, with studies indicating that “when individuals rely heavily on AI for information retrieval and decision making, their ability to engage in reflective problem solving and independent analysis may decline” (To Think or Not to Think: The Impact of AI on Critical-Thinking Skills).

Maintaining AI literacy and continuing to question the information you receive from LLMs can help protect your critical thinking skills. Use these tools appropriately and use the right tool for the job to help protect your skills (see below and the How to Study guide for more specific tools).

Copyright Violation

A major ethical consideration is the source of the information in training datasets, which include massive amounts of content scraped from the internet. This content may include copyrighted material, sensitive personal details, or proprietary data (The Ethical Implications of Large Language Models in AI). Although the court in a recent lawsuit against Meta found that Meta’s use of copyrighted material to train its LLM was transformative enough to be considered fair use (Meta fends off authors’ US copyright lawsuit over AI), the ruling also cautioned that in many scenarios, using copyrighted material without the copyright holder’s explicit consent would be unlawful.

Consider the source of information these tools are drawing on and how you might contribute to this problem. Be cautious when uploading information (like PDFs) that might be covered by copyright or any proprietary data to LLMs. If resources offer their own AI tools, like research assistance tools found through ProQuest databases, use these rather than turning to models owned by private companies like OpenAI, Anthropic, etc.

Biases

Another concern is bias in the outputs of LLMs. Because the results come from algorithms developed by human programmers, biases may originate in the underlying programming. In their article “Is ChatGPT biased?,” OpenAI acknowledges that ChatGPT is not free from biases and stereotypes and can even reinforce a user’s pre-existing biases because of the nature of its dialogue. An August 2025 article published in the Journal of Healthcare Informatics Research, Socio-Demographic Modifiers Shape Large Language Models’ Ethical Decisions, studied how nine open-source LLMs modified their medical ethical decisions based on socio-demographic indicators. Every model studied altered its ethical choices in response to these cues. While the study used synthetic data that may have affected the results, it is important to recognize that these biases exist, especially as AI is used to make decisions about triage and patient prioritization.

For an extreme example:

The modifications made to Grok, the proprietary LLM from X/Twitter’s xAI, in the summer of 2025 also demonstrate how susceptible these models are to human influence. After its code was altered, the model made blatantly antisemitic comments. Most examples of bias will not be this obvious, but it is helpful to stay aware that more subtle bias may be present in the output from these tools.

Environment

AI is being used to tackle some massive technological problems, and some researchers hope it will help find solutions to the current environmental crisis facing the world. However, these technologies (generative AI in particular) have a negative environmental impact of their own. The data centers that host AI servers consume huge amounts of water for cooling (AI has an environmental problem. Here’s what the world can do about that.), which can affect local water supplies. Much of the electricity needed to run these data centers comes from “fossil fuel-based power plants” (per MIT’s Explained: Generative AI’s environmental impact), and researchers estimate that a single ChatGPT query uses five times more electricity than an internet search (per the same MIT article). The hardware also relies on rare earth minerals, which are often mined in unsustainable ways and frequently not recycled effectively, contributing to additional pollution (from Yale School of the Environment’s Can We Mitigate AI’s Environmental Impacts?).

It is important to be mindful of these factors when using generative AI, while recognizing that it is possible for further progress to be made in reducing the environmental impacts in the future.

Data Privacy

Because generative AI technology is relatively new and evolving quickly, there is little regulation of how user data is collected and how the companies running these tools use that data.

OpenAI’s Privacy Policy states that they will collect personal data from users, including account information (such as name, date of birth, contact information), user content like prompts and uploads, and any communications with the company. That personal data is used to improve their services and is retained for varying amounts of time depending on different factors. If you are concerned about your personal data, keep in mind how different companies treat your data and explore their privacy policies individually.

Use it or Lose It

While there are many benefits to using LLMs, it is important to remember that the brain is a muscle – if you don’t use it, you can lose skills you have gained throughout your educational experience.

A recent study published in The Lancet Gastroenterology and Hepatology demonstrated that a cancer detection AI is highly successful at detecting tumorous growths. However, the doctors who used this technology became less accurate on their own: their detection rate without AI dropped by about 6 percentage points compared to their pre-experiment rate. While these technologies can help us make significant strides in different research fields, this study highlights the importance of maintaining our skills independent of AI.

It is important to understand that you need a solid foundation in the skills you use AI for, or you risk deskilling (losing abilities you previously developed). Make sure that you can do any of the tasks you use AI tools for, and maintain your knowledge base over time.

Evaluating AI Models

To evaluate information about an AI tool, you can use the ROBOT Test, a simple test developed by the LibrAIry team to help you analyze writing about AI tools as well as the tools themselves (Hervieux & Wheatley, 2020).

ROBOT Test

- Reliability

  - How reliable is the information available about the AI technology?

  - If it’s not produced by the party responsible for the AI, what are the author’s credentials? Bias?

  - If it is produced by the party responsible for the AI, how much information are they making available?

    - Is information only partially available due to trade secrets?

    - How biased is the information that they produce?

- Objective

  - What is the goal or objective of the use of AI?

  - What is the goal of sharing information about it?

    - To inform?

    - To convince?

    - To find financial support?

- Bias

  - What could create bias in the AI technology?

  - Are there ethical issues associated with this?

  - Are bias or ethical issues acknowledged?

    - By the source of information?

    - By the party responsible for the AI?

    - By its users?

- Owner

  - Who is the owner or developer of the AI technology?

  - Who is responsible for it?

    - Is it a private company?

    - The government?

    - A think tank or research group?

  - Who has access to it?

  - Who can use it?

- Type

  - Which subtype of AI is it?

  - Is the technology theoretical or applied?

  - What kind of information system does it rely on?

  - Does it rely on human intervention?

Prompt Engineering

A large part of using LLMs properly is being able to craft prompts that will return the information you want, with the appropriate scope, and with the correct level of detail necessary for a situation. Prompt engineering refers to the process of building effective queries in a generative AI tool to return the desired results.

Types of Prompts

There are several types of prompts used in generative AI that can be applied based on what you are asking the tool to do. Google Cloud’s Prompt engineering: overview and guide outlines the basic types of prompts and provides examples of effective prompts to receive quality outputs.

- Direct prompt/zero-shot – asking a direct question without additional context or examples, like requesting a summary of content or asking the tool to brainstorm

- One-, few-, and multi-shot prompts – including one or more examples of desired input-output pairs before the actual prompt to demonstrate the kind of output expected

- Chain of Thought prompts – directing the tool to explain the logical steps it used to reach the output, which gives more insight into its method and more ability to influence the final structure

- Zero-shot Chain of Thought prompts – combining zero-shot and Chain of Thought prompting by inputting a direct instruction with a request for a breakdown of the steps taken
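To show how these prompt types differ in structure, the Python sketch below only assembles prompt text; it does not call any AI service. The function names and the example task (classifying the tone of a sentence) are hypothetical, and the exact wording that works best will vary from tool to tool.

```python
def zero_shot(task: str) -> str:
    """Direct prompt: just the instruction, with no examples."""
    return task

def few_shot(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Show input-output pairs first so the model can follow the demonstrated pattern."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The real query comes last, with the output left blank for the model to fill in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

def zero_shot_chain_of_thought(task: str) -> str:
    """Direct instruction plus a request to break down the reasoning steps."""
    return f"{task}\nExplain your reasoning step by step before giving the final answer."

task = "Classify the tone of the sentence as positive, negative, or neutral."
examples = [
    ("The library extended its hours during finals.", "positive"),
    ("The database was down all afternoon.", "negative"),
]
sentence = "The guide was updated in August."

print(zero_shot(f"{task} Sentence: {sentence}"))
print(few_shot(task, examples, sentence))
print(zero_shot_chain_of_thought(f"{task} Sentence: {sentence}"))
```

Keeping the examples in a separate list makes it easy to move from one-shot to few-shot prompting by adding or removing pairs without rewriting the instruction.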

CLEAR Framework

The CLEAR Framework was developed to help users get the best possible results from models like ChatGPT (Artificial Intelligence for Research and Scholarship):

- Concise – prompts should be short and to the point, with any extraneous information trimmed

- Logical – there should be a natural, logical progression of ideas to develop the context around the prompt and build connections between ideas necessary for the output

- Explicit – specify the expected output as far as format, content, and/or scope

- Adaptive – be flexible with your prompt phrasing and structures as you find what works and what does not

- Reflective – continually assess the prompts you use and your prompting techniques based on the responses you receive to improve future prompts

Anthropic Recommendations

Anthropic, the developer of the LLM Claude, recommends the following steps for engineering your prompts:

- Prompt generator – use the provided prompt generator to input your first prompt, as it can be difficult to figure out how to get started

- Clear and direct – give explicit instructions with contextual information so the model can easily align with your desired output (bulleted lists or numbered steps can be helpful)

- Examples – providing examples of the desired output helps LLMs like Claude produce more effective responses by improving accuracy, consistency, and performance

- Chain of Thought – asking the model to break down problems into sequential steps is beneficial for analysis and problem-solving, reducing errors in the response and providing more organized outputs

- Use XML tags – including XML tags like <example> and <formatting> can improve the clarity of your request and help structure the output so you can extract relevant information more easily

- Role – specify the role you want the model to take on, clarifying the perspective it should answer from, to make responses more accurate and keep the tone appropriate for the final audience

- Chain complex prompts – rather than crafting one highly detailed prompt with multiple steps, break it down into smaller requests that each build on the previous response (this can make it easier to identify any errors in the results)
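The XML-tag and prompt-chaining recommendations can be sketched in Python as below. This is an illustration under stated assumptions: it only builds prompt text, the tag names (`instructions`, `document`, `formatting`) are example choices rather than required names, and `ask_model` is a hypothetical placeholder for whichever chatbot or API you use, not a real library function. The `fake_model` stand-in exists only so the sketch runs without any AI service.

```python
from typing import Callable

def tag(name: str, content: str) -> str:
    """Wrap content in an XML-style tag so each part of the prompt is clearly labeled."""
    return f"<{name}>\n{content}\n</{name}>"

def build_prompt(instructions: str, document: str, formatting: str) -> str:
    """Assemble a prompt whose sections are separated by XML tags."""
    return "\n\n".join([
        tag("instructions", instructions),
        tag("document", document),
        tag("formatting", formatting),
    ])

def chain(steps: list[str], document: str, ask_model: Callable[[str], str]) -> list[str]:
    """Prompt chaining: each small request works on the previous response."""
    outputs = []
    previous = document
    for step in steps:
        prompt = build_prompt(step, previous, "Answer in plain text.")
        previous = ask_model(prompt)  # hypothetical model call
        outputs.append(previous)      # keep every intermediate result so errors are easy to spot
    return outputs

def fake_model(prompt: str) -> str:
    """Stand-in for a real model (for demonstration only): echoes the instruction it received."""
    instruction = prompt.split("\n")[1]  # line right after <instructions>
    return f"[response to: {instruction}]"

steps = ["List the main claims in the text.", "Rate how well each claim is supported."]
for result in chain(steps, "Open-access article text goes here.", fake_model):
    print(result)
```

Because each step’s output is stored separately, you can review the intermediate answers one at a time, which is the main benefit Anthropic’s chaining advice points to.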

More Resources for Prompting

- Prompt engineering techniques – from IBM

- Advanced Prompt Engineering for Data Science Projects – from Towards Data Science

- Prompt Engineering Guide – by DAIR.AI

- ChatGPT Prompt Engineering for Developers – course from DeepLearning.AI

- Prompt Engineering Guide – by Learn Prompting

- Prompt Engineering: From Words to Art and Copy – from Saxifrage.xyz

- Effective Prompts for AI: The Essentials – from MIT Management’s AI Basics

- Prompt Literacy – from Florida International University Libraries’ Artificial Intelligence Now: ChatGPT + AI Literacy Toolbox

If you need some tools for effective studying or suggestions on how to include generative AI in your study process, check out the How to Study guide.

AI Tools for Research

While ChatGPT, Claude, and other LLMs seem like the ideal tool for any research project, they each have inherent limitations because they were not designed specifically for these tasks and draw on very large training datasets.

Regardless of the tool you use, do not upload PDFs or other articles from subscription databases, journals, or information from books to any AI tools. Doing so is a violation of the terms of use agreements in place with vendors.

The Right Tool for the Job

These are some programs that you can use in the research process for specific purposes:

- Semantic Scholar – over 200 million science-based academic papers and conference reports with AI-powered summaries, citation impact-factor calculations, citations, and recommendations for additional research

- Elicit – summarizes papers, extracts data, and synthesizes findings from the academic papers in Semantic Scholar

  - Paid and free versions

  - May still return inaccurate information

- Scite – input a research question and receive abstracts and/or citations from papers related to the question

  - Includes the searches used to gather results, which is necessary for citing AI-generated content

- ResearchRabbit – citation-based literature mapping tool to find articles similar to ones you’ve already found, which connects to Zotero for exporting citations

- Inciteful.xyz – like ResearchRabbit, finds connected articles based on the DOI or title of a seed article, or finds the connection between two articles

- NotebookLM – upload PDFs (open-access only!), websites, and other documents and receive summaries and connections between subjects; does not train its model on uploaded documents or personal data

  - Can create podcasts based on your subject

  - Google product

- Perplexity AI – a real-time, AI-powered search engine that prioritizes accuracy and provides citations so you can trace and verify information

- Consensus – an AI research tool using a database of more than 200 million peer-reviewed research articles

  - Requires creating an account

  - Free and paid versions, including a 3-month discount for students with a valid school email

- Follow Ithaka S+R’s Generative AI Product Tracker for updated information on products marketed toward post-secondary audiences for teaching, learning, or research activities

More Resources

AI literacy is an important consideration right now in all aspects of education and for employees expected to use these tools effectively. These resources, mainly from libraries and professionals researching the best use of these tools, can help you continue developing your knowledge of artificial intelligence. Keep in mind that the field is changing quickly, so it’s important to stay current with new developments.

Other Resources

- AI Tools and Resources – University of South Florida LibGuide

- AI Literacy as Information Literacy – from the University of North Dakota’s Artificial Intelligence Research Guide

- AI Literacy – Sacramento State University Library’s Research Guide

- AI Literacy – from the University of Calgary’s Artificial Intelligence Research Guide

- Artificial Intelligence and Information Literacy – University of Maryland