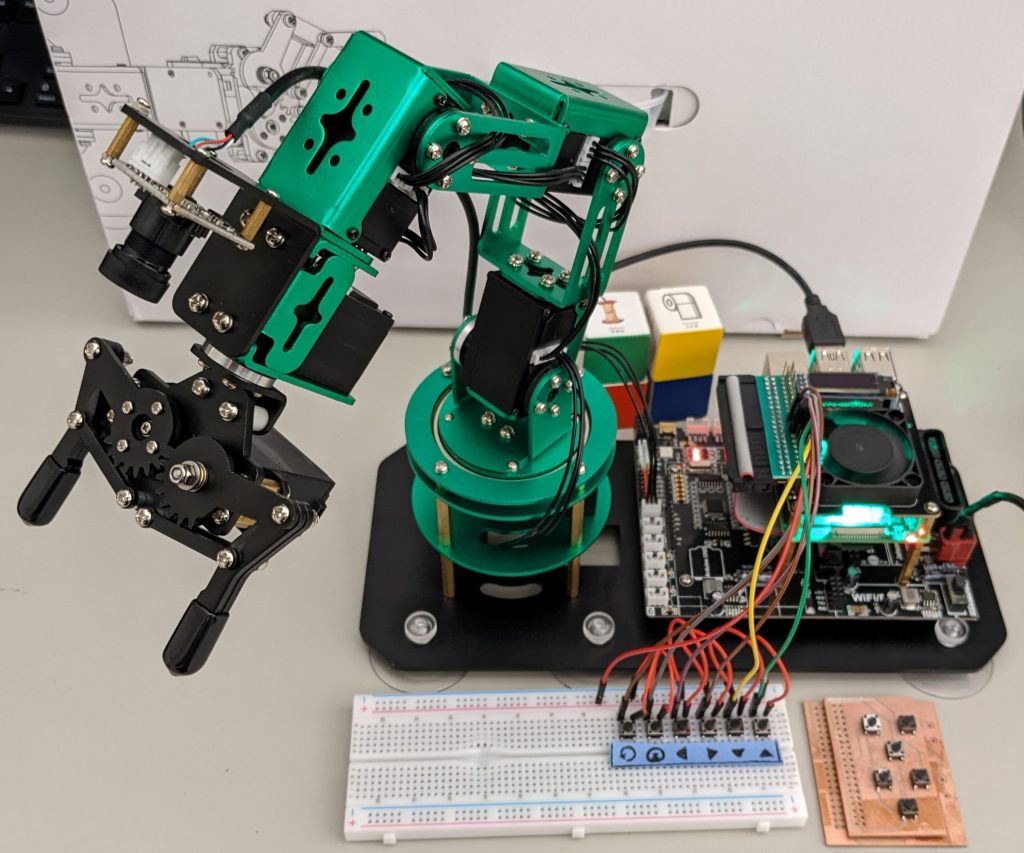

A brain-computer interface (BCI) robotic arm, the BCI-Arm, was developed as an assistive device for performing general motor tasks. The BCI-Arm uses an Emotiv EPOC X headset to record the user's electroencephalography (EEG) signals, which serve as the control input for a mounted robotic arm. The user trains the system to respond to a sequence of mental commands, each corresponding to a different motion, and can then move the BCI-Arm through a series of pre-guided motions. The device is intended to assist an individual by allowing them to pick up and move objects on a surface using only their mental activity.
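The mapping from mental commands to arm motions described above can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: the command labels, the motion step names, the `power` field, and the 0.6 threshold are hypothetical and are not taken from the BCI-Arm implementation or from the Emotiv software.

```python
from dataclasses import dataclass

# Hypothetical command labels and motion steps (assumed for illustration;
# the actual BCI-Arm commands and motions are not listed in the source).
MOTIONS = {
    "push": ["extend_arm"],
    "pull": ["retract_arm"],
    "lift": ["close_gripper", "raise_arm"],
    "drop": ["lower_arm", "open_gripper"],
}


@dataclass
class MentalCommand:
    label: str    # name of the trained mental command
    power: float  # assumed detection strength in the range [0, 1]


def plan_motions(commands, threshold=0.6):
    """Translate a sequence of detected commands into a flat list of motion steps.

    Commands below the (assumed) strength threshold are ignored, as are
    labels with no motion assigned to them.
    """
    steps = []
    for cmd in commands:
        if cmd.power < threshold:
            continue  # too weak to act on
        steps.extend(MOTIONS.get(cmd.label, []))
    return steps


if __name__ == "__main__":
    detected = [
        MentalCommand("lift", 0.9),
        MentalCommand("push", 0.3),  # below threshold, skipped
        MentalCommand("drop", 0.7),
    ]
    for step in plan_motions(detected):
        print(step)
```

Keeping the mapping in a plain dictionary separates the trained command set from the motion definitions, so either could be changed without touching the other. A real controller would also need to handle timing and safety limits, which this sketch omits.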

Beyond edge computing, we also study the application of classical computational intelligence to extract important health information from raw data and sensor signals. In the first stage of developing the brain-computer interface, we collected multiple EEG datasets and applied algorithms for automatic detection and classification. We are also collaborating with Prof. Ali Boolani and Prof. Kwadwo Appiah-Kubi to extract useful gait, emotion, fatigue, and balance information from wearable inertial sensors, electromyography data, and other sources.

Lab Publications:

C2. Garrett Stoyell, Anthony Seybolt, Thomas Griebel, Siddesh Sood, Md Abdul Baset Sarker, Abul Khondker, Masudul Imtiaz. The Mind-Controlled Wheelchair. 2022 ASEE St. Lawrence Section Annual Conference.

C1. E. Ketola, C. Lloyd, D. Shuhart, J. Schmidt, R. Morenz, A. Khondker, M. Imtiaz. Lessons Learned From the Initial Development of a Brain Controlled Assistive Device. IEEE CCWC, 2022.