Vision-Enabled Pediatric Prosthetic Hand

Empowering children with advanced, low-cost prosthetics.

Our research presents an ‘AI vision’-based digital design of the control hardware for a novel pediatric prosthesis that assists children with upper-limb disabilities. The prosthetic hand will have an anthropomorphic appearance, a soft structure, multi-articulating functionality for grasping a wide range of objects, and a low weight. Its size will match the natural hand of the target population: children aged 5-10 years. This machine-vision-based, system-on-an-FPGA research has significant societal importance, because children in early and middle childhood with limb loss are underserved by current prosthetic hand options. Because children grow constantly, their hand prostheses must be replaced frequently, and the high cost of commercial prostheses puts this out of reach for many families. Most prostheses available to them are myoelectric and priced at around 14,000 USD. Our goal is to offer an alternative: a vision-controlled smart prosthetic hand that can be customized for a range of body and arm sizes. At the same time, we aim to keep both cost and power consumption low so that the device remains accessible in low-income countries.

Introduction to the Problem: Every year, children with congenital or acquired limb loss face significant physical and emotional challenges because affordable prosthetics are scarce. Commercial prostheses, while functional, are out of reach for many families, with costs that can exceed $14,000. Furthermore, existing solutions such as myoelectric prostheses have technical limitations that hinder widespread adoption.

Our Solution:

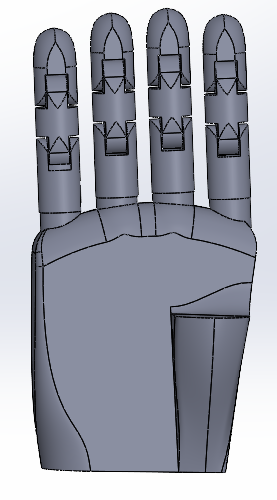

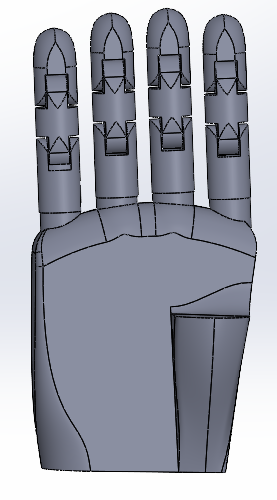

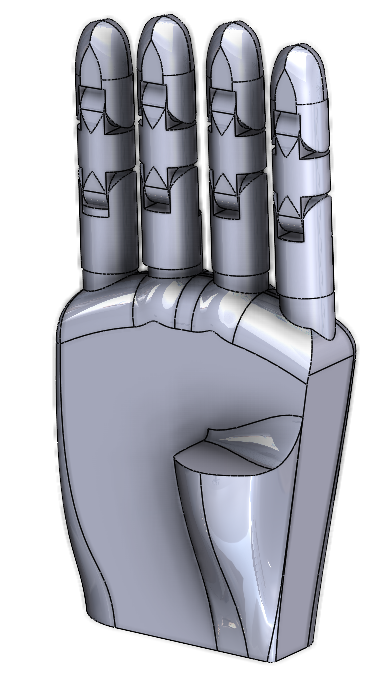

Our team has developed a prosthetic hand designed specifically for children aged 4-10, integrating AI-based vision control. With its lightweight, customizable, and 3D-printed design, this hand offers a functional, affordable, and scalable alternative to conventional prostheses. By leveraging machine vision and sensors, our design allows for real-time object detection and automatic grasping without the need for extensive user training.

- AI Vision Control: A wrist-mounted camera provides real-time object detection and distance approximation for precise grasping.

- Low-Cost 3D Printing: The hand is made from polylactic acid (PLA), a thermoplastic polyester, and thermoplastic polyurethane (TPU), combining flexibility with durability.

- Power Efficiency: An FPGA-based control system reduces overall power consumption, which helps keep the device affordable and usable even in resource-constrained environments.

Design & Materials

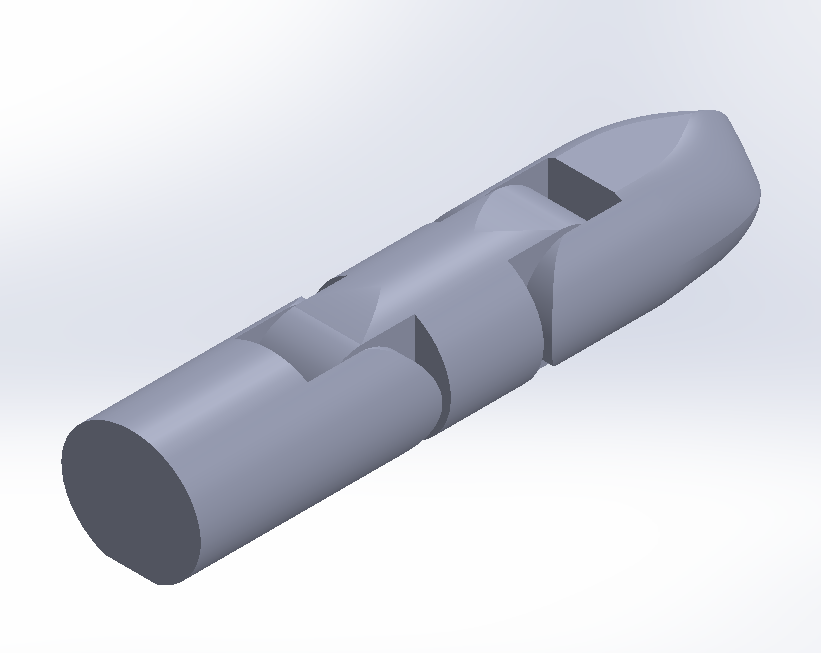

Our prosthetic hand is 3D-printed in-house from a combination of PLA and TPU, creating a soft, flexible structure. This keeps the design lightweight while ensuring the hand can withstand the physical stresses of daily use. TPU's flexibility is used in key areas such as the fingertips to improve grasping, while PLA provides durability in the other structural parts.

Actuation & Control

The prosthetic hand uses three degrees of actuation to replicate the movements required for everyday activities. The hand’s movements are controlled by an AI vision system embedded within the wrist, which uses a micro camera to detect objects in real time. This system enables precise, automatic grasping, allowing children to use the prosthesis with minimal training.

Real-World Testing

Our prototypes have undergone rigorous lab testing, and real-world testing is set to begin soon. Certified prosthetists and clinicians are currently reviewing the design, after which we will begin testing with a small group of children with upper-limb differences. The prosthesis is designed to be customizable as the child grows, extending its useful life and reducing how often it must be replaced.

The Research Team & Collaborators

PI Imtiaz

With over a decade of experience in machine vision and sensor development, PI Imtiaz leads the research team at Clarkson University. His expertise in designing low-power AI systems has resulted in numerous innovations, from wearable sensors to heart rate monitors. He is currently applying these principles to prosthetic development, creating a robust, vision-enabled control unit for pediatric prostheses.

Co-PI Kevin Fite

Kevin Fite brings a wealth of experience in assistive technologies, specifically in designing and controlling upper-extremity prosthetic limbs. His research at the Laboratory for Intelligent Automation focuses on low-cost, customizable solutions for individuals with limb loss. Kevin has been instrumental in guiding the development of this prosthetic hand’s mechanical and actuation system.

Collaborators: This project is a collaboration between Clarkson University’s AI Vision Lab, Lab for Intelligent Automation, and the Center for Advanced PCB Design and Manufacture.

Once the camera detects an object, the Time-of-Flight (ToF) sensor (VL6180X) measures its distance. If the object is within 70-90 mm of the hand, a “Hand Close” signal is sent to the motor controller to start closing the hand. As the hand closes, pressure sensors provide feedback. If the pressure exceeds a predefined threshold, the closing motion halts to prevent overexertion. Once the hand is fully closed, the accelerometer (ADXL345) is activated and waits for a specific gesture. When the gesture is detected, the system sends a “Hand Open” signal to the motor controller to reopen the hand. A video demonstration of how the previous design functions is shown below.
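The grasp sequence described above behaves like a small state machine. The Python sketch below illustrates that logic under stated assumptions. The 70-90 mm window comes from the text. The state names, the `Inputs` fields, the normalized pressure limit of 0.8, the `fully_closed` end-of-travel flag and the command strings are all hypothetical placeholders. They are not the project's firmware or the VL6180X/ADXL345 driver interfaces. The sketch also assumes that stopping at the pressure limit counts as a completed grasp, so the release gesture is armed in either case.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional, Tuple

# Distance window from the text; the pressure limit is an assumed,
# normalized placeholder value.
CLOSE_MIN_MM = 70
CLOSE_MAX_MM = 90
PRESSURE_LIMIT = 0.8


class State(Enum):
    OPEN = auto()     # waiting for a detected object inside the window
    CLOSING = auto()  # motor driving the fingers closed
    CLOSED = auto()   # grasp held; accelerometer armed for the release gesture


@dataclass
class Inputs:
    object_detected: bool  # camera classifier reports a target object
    distance_mm: float     # ToF range reading
    pressure: float        # fingertip pressure (hypothetical normalized units)
    fully_closed: bool     # hypothetical end-of-travel flag
    release_gesture: bool  # output of a hypothetical gesture detector


def step(state: State, x: Inputs) -> Tuple[State, Optional[str]]:
    """Advance one control tick and return (next_state, motor_command)."""
    if state is State.OPEN:
        in_window = CLOSE_MIN_MM <= x.distance_mm <= CLOSE_MAX_MM
        if x.object_detected and in_window:
            return State.CLOSING, "HAND_CLOSE"
        return State.OPEN, None
    if state is State.CLOSING:
        # Halt on excessive pressure or when the fingers reach full closure.
        if x.pressure > PRESSURE_LIMIT or x.fully_closed:
            return State.CLOSED, "HAND_STOP"
        return State.CLOSING, None
    # State.CLOSED: the gesture is only acted on here, so it is ignored earlier.
    if x.release_gesture:
        return State.OPEN, "HAND_OPEN"
    return State.CLOSED, None


if __name__ == "__main__":
    state = State.OPEN
    ticks = [
        Inputs(True, 150, 0.0, False, False),  # object seen but too far away
        Inputs(True, 80, 0.0, False, False),   # inside the window: close
        Inputs(True, 80, 0.5, False, False),   # still closing
        Inputs(True, 80, 0.9, False, False),   # pressure limit reached: stop
        Inputs(True, 80, 0.9, False, True),    # release gesture: open
    ]
    for t in ticks:
        state, cmd = step(state, t)
        print(state.name, cmd)
```

Keeping sensor reads and motor output outside `step()` lets the transition logic be tested on a desktop before it is ported to the embedded controller.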

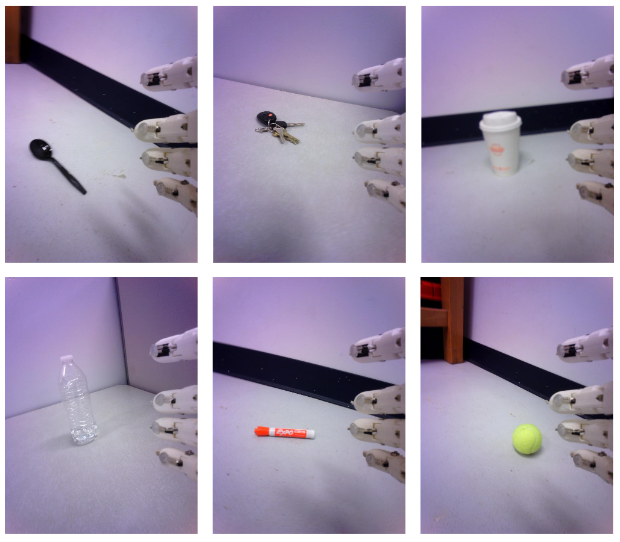

Image Collection. To develop highly efficient deep neural networks, 2000 images were initially captured from the wrist position and used for training on a computer, before the networks are customized for a resource-constrained processor. This pilot study captured images of six object classes (ball, cup, bottle, pen, spoon, keys). More images are needed that cover a wider variety of object classes commonly encountered in daily life, under different lighting conditions and backgrounds. This data collection may not require participation from the amputee population itself. After image collection, as in the pilot study, two individuals will manually review the images. They will verify whether the cameras can capture usable images under all lighting conditions, including dim environments.
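Before training, collected images are commonly divided into training and validation sets. The sketch below shows one standard way to do this: a stratified split that takes the same validation fraction from each of the six object classes, so that no class is under-represented in validation. The text does not say how the 2000 pilot images were divided. The 20% validation fraction, the seed, the `stratified_split` name and the synthetic file names in the example are therefore assumptions for illustration only.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

# The six pilot object classes named in the text.
CLASSES = ["ball", "cup", "bottle", "pen", "spoon", "keys"]


def stratified_split(
    labels: Dict[str, str], val_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Split image IDs into (train, val), taking val_fraction of each class."""
    by_class: Dict[str, List[str]] = defaultdict(list)
    for image_id, cls in labels.items():
        by_class[cls].append(image_id)

    rng = random.Random(seed)  # fixed seed gives a reproducible split
    train: List[str] = []
    val: List[str] = []
    for cls in sorted(by_class):
        ids = sorted(by_class[cls])  # deterministic order before shuffling
        rng.shuffle(ids)
        n_val = round(len(ids) * val_fraction)
        val.extend(ids[:n_val])
        train.extend(ids[n_val:])
    return train, val


if __name__ == "__main__":
    # Synthetic placeholder labels, NOT the real pilot data: 2000 file
    # names assigned to the six classes in round-robin order.
    labels = {f"img_{i:04d}.jpg": CLASSES[i % len(CLASSES)] for i in range(2000)}
    train, val = stratified_split(labels)
    print(len(train), len(val))  # prints: 1598 402
```

Fixing the seed makes the split reproducible. This matters when network variants are compared on the same validation images before one is customized for the resource-constrained processor.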